How Large Language Models Work

Before deep learning, computers processed language using hand-coded rules and statistical models like n-grams.

These early systems could not capture the nuances of human language and failed to generalize.

The turning point came with word embeddings, a way to represent words as dense vectors in continuous space.

This marked the beginning of teaching machines the meaning of words through patterns in data.
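To make "dense vectors in continuous space" concrete, here is a minimal sketch. The three-dimensional vectors are hand-picked assumptions for illustration only; real embeddings are learned from data and typically have hundreds of dimensions. The point is that related words end up pointing in similar directions, which cosine similarity can measure.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration.
# Real embeddings are learned from data and are much larger.
embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Related words score close to 1.0, unrelated words score lower.
print(cosine_similarity(embeddings["king"], embeddings["queen"]))
print(cosine_similarity(embeddings["king"], embeddings["banana"]))
```

With these made-up vectors, "king" and "queen" score much higher than "king" and "banana".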

Below are the major milestones that paved the way for the LLMs we use today.

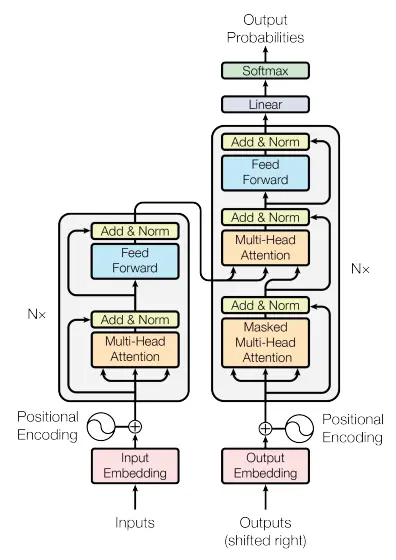

The Transformer architecture, introduced in the 2017 paper “Attention Is All You Need,” is the foundation of nearly all modern large language models, including GPT, Claude, LLaMA, Gemini, and Mistral.

Unlike recurrent neural networks (RNNs), which read a sequence one token at a time, Transformers process the entire sequence in parallel using self-attention, which lets each token decide how much attention to give to every other token. Replacing sequential processing with self-attention is also what lets these models capture long-range dependencies more effectively than RNNs.
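The idea of each token weighing every other token can be sketched in a few lines. This is a simplified illustration, not the full architecture from the paper: it assumes queries, keys, and values are all the raw token vectors, whereas a real Transformer first applies learned projection matrices and runs several attention heads. The token vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x.

    Simplification: learned query/key/value projections and multiple
    heads are omitted.
    """
    d = len(x[0])
    outputs, all_weights = [], []
    for query in x:
        # Score this token against every token in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights = softmax(scores)
        # The output is a weighted average of all token vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, x)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how one token spreads its attention across the sequence. Because no step depends on the previous token's result, every row can be computed at the same time, which is where the parallelism comes from.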

But what exactly makes a Transformer work?

Let’s break down its core components, each playing a crucial role in how these models encode, attend to, and transform text.

This video serves as an excellent companion to the "Attention Is All You Need" paper by Vaswani et al. (2017).

It visualizes the architecture and inner workings of the Transformer in a way that's accessible to both technical and non-technical audiences, highlighting key ideas.

Language models don’t understand text like humans.

They predict the most likely next token based on everything seen before, using a probability distribution over the vocabulary.

For example, the text “The dog barks” might be split into the tokens [“The”, “ dog”, “ barks”], which are then mapped to token IDs.
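Both steps, mapping tokens to IDs and picking a next token from a probability distribution, can be sketched as follows. The five-entry vocabulary and the scores are assumptions made up for this example; a real model has a vocabulary of tens of thousands of tokens and computes the scores with a neural network.

```python
import math

# Hypothetical tiny vocabulary for illustration.
vocab = ["The", " dog", " barks", " sleeps", " cat"]
token_to_id = {token: i for i, token in enumerate(vocab)}

# Step 1: map the tokens seen so far to their IDs.
tokens = ["The", " dog"]
token_ids = [token_to_id[t] for t in tokens]
print(token_ids)  # [0, 1]

# Step 2: made-up scores (logits) for each candidate next token.
logits = [0.1, 0.2, 3.0, 2.0, 0.5]

def softmax(scores):
    """Convert scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax(logits)

# Step 3: greedy decoding picks the single most likely token.
next_id = max(range(len(probs)), key=probs.__getitem__)
print(repr(vocab[next_id]), round(probs[next_id], 2))
```

Greedy selection is only one option. In practice, systems often sample from the distribution instead, which is why the same prompt can produce different answers.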

Training a large language model involves exposing it to massive text datasets and teaching it to predict tokens. The process can take weeks on AI supercomputers with over 10,000 GPUs, consume hundreds of zettaFLOPs of compute, and cost tens of millions of dollars.

A zettaFLOP, as used here, is 10²¹ floating-point operations (that’s 1 sextillion, or a 1 followed by 21 zeros). It is a count of total work, not a speed; the related rate unit, zettaFLOPS, means 10²¹ operations per second. Sustained zettaFLOPS-scale speed remains largely theoretical, but counting total operations in zettaFLOPs is a useful way to express the cumulative compute required to train today’s most advanced AI models.
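A back-of-the-envelope calculation shows how a training run adds up to hundreds of units of 10²¹ operations. Every input below is an illustrative assumption, not a measurement of any real run: the sustained per-GPU speed in particular varies widely with hardware and utilization.

```python
# All inputs are illustrative assumptions, not figures from a real training run.
num_gpus = 10_000
sustained_flops_per_gpu = 3e13  # operations per second each GPU actually achieves
training_days = 14

seconds = training_days * 24 * 60 * 60
total_operations = num_gpus * sustained_flops_per_gpu * seconds

zetta = 1e21
print(f"{total_operations / zetta:.0f} x 10^21 operations")  # 363 x 10^21 operations
```

The total is simply GPUs times speed times time, so doubling any one of the three doubles the compute.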

After pretraining, large language models can be fine-tuned to perform better on specific tasks or align more closely with human expectations. This step is optional but widely used to make models more useful in real-world applications.

Fine-tuning helps the model:

Supervised fine-tuning (often called instruction tuning) is the most common approach. The model is trained on examples where inputs are paired with high-quality desired responses. Over time, it learns to generalize and follow similar instructions even if they weren’t part of training.

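Reinforcement learning from human feedback (RLHF) is one of the most powerful fine-tuning techniques. It improves alignment through feedback from human evaluators, who compare model responses and indicate which ones they prefer.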

This method was used in InstructGPT, one of the earliest aligned models (Ouyang et al., 2022), and later extended by Anthropic with Constitutional AI, which teaches models to critique and revise their own responses based on predefined ethical guidelines (Bai et al., 2022).
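One ingredient of this kind of pipeline is a reward model trained on human comparisons: given two responses to the same prompt, it should score the human-preferred one higher. A minimal sketch of the pairwise loss commonly used for that step is shown below. The function name and the reward values are assumptions for illustration, and the later reinforcement learning stage is not shown.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: small when the human-preferred response scores higher."""
    margin = reward_chosen - reward_rejected
    sigmoid = 1.0 / (1.0 + math.exp(-margin))
    return -math.log(sigmoid)

# Made-up reward scores for two responses to the same prompt.
print(preference_loss(2.0, 0.5))  # reward model agrees with the human ranking
print(preference_loss(0.5, 2.0))  # reward model disagrees, so the loss is larger
```

Minimizing this loss over many human comparisons pushes the reward model to rank responses the way the evaluators did.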

LLMs can write essays, explain jokes, and produce code, yet they don’t actually “understand” anything.

They don’t form beliefs or possess intent.

They are probabilistic engines trained to continue text sequences in plausible ways.

They simulate intelligence by:

“Training ever-larger language models without addressing underlying limitations risks creating systems that sound authoritative but lack accountability or factual grounding.” 📚 Stochastic Parrots: Bender et al., 2021

Next-generation language models are evolving rapidly — not just in scale, but in capability.

These models are becoming:

As language models evolve into more capable and autonomous systems, several core paradigms are shaping their future.

Three foundational ideas:

🧠 Mixture-of-Experts (MoE)

Tip: Read Shazeer et al., 2017

MoE models improve efficiency by activating only a small subset of their parameters for each input — making it possible to scale up without proportionally increasing compute.
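Here is a minimal sketch of the routing idea. The four "experts" are stand-in functions and the gate scores are made up; in a real MoE layer each expert is a neural network and a learned router computes the scores from the input.

```python
import math

def softmax(scores):
    """Convert scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Four toy experts; in a real model each one is a neural network layer.
experts = [
    lambda x: x + 1.0,
    lambda x: x * 2.0,
    lambda x: x - 3.0,
    lambda x: x * x,
]

def moe_forward(x, gate_scores, top_k=2):
    """Run only the top_k highest-scoring experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen

# Hypothetical gate scores; a real router derives them from the input.
output, chosen = moe_forward(3.0, gate_scores=[0.1, 2.0, -1.0, 2.0])
print(chosen, output)  # [1, 3] 7.5
```

Only two of the four experts run for this input, so the work per input stays roughly constant even if more experts are added.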

🧩 Chain-of-Thought Reasoning

Tip: Read Wei et al., 2022

This prompting strategy encourages models to think step by step, significantly improving performance on complex reasoning and math tasks.
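In practice, this often means putting a worked example in front of the real question so the model imitates the step-by-step style. The sketch below only builds the prompt text; the exemplar, the question, and the function name are hypothetical, and sending the prompt to a model is left out.

```python
# A hypothetical worked example (exemplar) that shows its reasoning.
exemplar = (
    "Q: A shop has 4 boxes with 6 pens each. It sells 5 pens. How many pens are left?\n"
    "A: 4 boxes of 6 pens is 24 pens. 24 - 5 = 19. The answer is 19.\n"
)

def build_cot_prompt(question):
    """Prepend a worked exemplar so the model imitates step-by-step answers."""
    return f"{exemplar}\nQ: {question}\nA:"

prompt = build_cot_prompt(
    "A train has 3 cars with 40 seats each. 25 seats are taken. How many are free?"
)
print(prompt)
```

The prompt ends at "A:" so that the model's continuation is the reasoning followed by the final answer.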

🔍 Retrieval-Augmented Generation (RAG)

Tip: Explore the Cohere RAG Guide

RAG combines language models with external knowledge sources, allowing them to pull in relevant information from databases or documents before generating responses.
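The retrieve-then-generate flow can be sketched as follows. This is a simplified illustration under stated assumptions: the three documents are made up, and plain word overlap stands in for the embedding-based search that production systems typically use. The final step, sending the prompt to a language model, is not shown.

```python
import math
from collections import Counter

# Hypothetical knowledge base; a real system would query a document store.
documents = [
    "Our support team is available Monday to Friday from 9am to 5pm.",
    "Refunds are issued within 14 days of purchase.",
    "The premium plan includes priority support and extra storage.",
]

def bag_of_words(text):
    """Lowercase the text, drop basic punctuation, and count the words."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, k=1):
    """Return the k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(question):
    """Place the retrieved text in front of the question."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"

print(build_prompt("How long do refunds take?"))
```

Because the answer is grounded in retrieved text, updating the documents changes what the model can say without retraining it.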

These techniques are the building blocks of next-gen AI systems.

Start with these papers to see where the future is heading.

Large Language Models represent a seismic shift in human-computer interaction.

They are probabilistic engines of knowledge synthesis.

If search engines were about keywords, LLMs are about context, clarity, and citations.

RAG (Retrieval-Augmented Generation) is a cutting-edge AI technique that enhances traditional language models by integrating an external search or knowledge retrieval system. Instead of relying solely on pre-trained data, a RAG-enabled model can search a database or knowledge source in real time and use the results to generate more accurate, contextually relevant answers.

For GEO (Generative Engine Optimization), this is a game changer.

GEO doesn't just respond with generic language—it retrieves fresh, relevant insights from your company’s knowledge base, documents, or external web content before generating its reply. This means:

By combining the strengths of generation and retrieval, RAG ensures GEO doesn't just sound smart—it is smart, aligned with your source of truth.

Large Language Models (LLMs) like GPT are trained on vast amounts of text data to learn the patterns, structures, and relationships between words. At their core, they predict the next word in a sequence based on what came before—enabling them to generate coherent, human-like language.

This matters for GEO (Generative Engine Optimization) because it means your content must be:

By understanding how LLMs “think,” businesses can optimize content not just for humans or search engines—but for the AI models that are becoming the new discovery layer.

Bottom line: If your content helps the model predict the right answer, GEO helps users find you.

Tokenization is the process by which AI models, like GPT, break down text into small units, called tokens, before processing. These tokens can be as small as a single character or as large as a whole word. For example, the word “marketing” might be one token, while “AI-powered tools” could be split into several.
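The example above can be reproduced with a toy tokenizer. This sketch assumes a small hand-written vocabulary and a greedy longest-match rule; real tokenizers learn their vocabulary from data, so the actual split of any given text depends on the model.

```python
# Hypothetical vocabulary; real tokenizers learn theirs from data.
vocab = {"marketing", "AI", "-", "powered", " tools", " "}

def tokenize(text):
    """Greedy longest-match: take the longest vocabulary entry at each position."""
    tokens = []
    i = 0
    while i < len(text):
        for end in range(len(text), i, -1):
            if text[i:end] in vocab:
                tokens.append(text[i:end])
                i = end
                break
        else:
            # Unknown character: fall back to a single-character token.
            tokens.append(text[i])
            i += 1
    return tokens

print(tokenize("marketing"))         # ['marketing']
print(tokenize("AI-powered tools"))  # ['AI', '-', 'powered', ' tools']
```

Text that matches the vocabulary well becomes a few long tokens, while unfamiliar strings fall apart into many short ones.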

Why does this matter for GEO (Generative Engine Optimization)?

Because how well your content is tokenized directly impacts how accurately it’s understood and retrieved by AI. Poorly structured or overly complex writing may confuse token boundaries, leading to missed context or incorrect responses.

✅ Clear, concise language = better tokenization

✅ Headings, lists, and structured data = easier to parse

✅ Consistent terminology = improved AI recall

In short, optimizing for GEO means writing not just for readers or search engines, but also for how the AI tokenizes and interprets your content behind the scenes.

Large Language Models (LLMs) are AI systems trained on massive amounts of text data, from websites to books, to understand and generate language.

They use deep learning algorithms, specifically transformer architectures, to model the structure and meaning of language.

LLMs don't "know" facts in the way humans do. Instead, they predict the next word in a sequence using probabilities, based on the context of everything that came before it. This ability enables them to produce fluent and relevant responses across countless topics.

For a deeper look at the mechanics, check out our full blog post: How Large Language Models Work.

The transformer is the foundational architecture behind modern LLMs like GPT. Introduced in a groundbreaking 2017 research paper, transformers revolutionized natural language processing by allowing models to consider the entire context of a sentence at once, rather than just word-by-word sequences.

The key innovation is the attention mechanism, which helps the model decide which words in a sentence are most relevant to each other, essentially mimicking how humans pay attention to specific details in a conversation.

Transformers make it possible for LLMs to generate more coherent, context-aware, and accurate responses.

This is why they're at the heart of most state-of-the-art language models today.